Join our daily and weekly newsletters for the latest updates and exclusive content on industry-leading AI coverage. Learn More

AWS is expanding the capabilities of its cloud database portfolio, while at the same time reducing costs for enterprises.

In a session at AWS re:invent 2024 today, the cloud giant outlined a series of cloud database innovations. These include the new Amazon Aurora DSQL distributed SQL database, global tables for the Amazon DynamoDB NoSQL database, as well as new multi-region capabilities for Amazon MemoryDB. AWS also detailed its overall database strategy and outlined how vector database functionally fits in to help enable generative AI applications. Alongside the updates, AWS also revealed a series of price cuts, including lowering Amazon DynamoDB on-demand pricing by up to 50%.

While database functionality is interesting to database administrators, it is the practical utility that cloud databases offer that is driving AWS’ innovations. The new features are all part of an overall strategy to enable increasingly large and sophisticated workloads across distributed deployments. The AWS cloud database portfolio is also very focused on enabling real-time demanding workloads. During today’s keynote, multiple AWS users including United Airlines, BMW and the National Football League talked about how they are using AWS cloud databases.

“We are driven to innovate and make databases effortless for you builders, so that you can focus your time and energy in building the next generation of applications,” Ganapathy (G2) Krishnamoorthy, VP of database services at AWS, said during the session. “Database is a critical building block in your applications, and it’s part of the bigger picture of our vision for data analytics and AI.”

How AWS is rethinking the concept of distributed SQL with Amazon Aurora DSQL

The concept of a distributed SQL database is not new. With distributed SQL, a relational database can be replicated across multiple servers, and even geographies, to enable better availability and scale. Multiple vendors including Google, Microsoft, CockroachDB, Yugabyte and ScyllaDB all have distributed SQL offerings.

AWS is now rethinking how distributed SQL architecture works in an attempt to accelerate reads and writes for always-available applications. Krishnamoorthy explained that, unlike traditional distributed databases that often rely on sharding and assigned leaders, Aurora DSQL implements a no single leader architecture, enabling limitless scaling.

The new database is built on the Firecracker micro virtual machine technology that powers the AWS Lambda serverless technology. Amazon Aurora DSQL runs as a small, ephemeral microservice that allows independent scaling of each system component — query processor, transaction system and storage system.

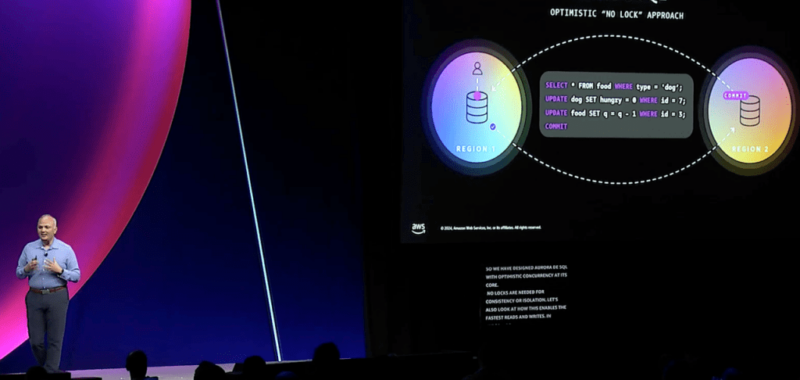

Optimistic concurrency comes to distributed SQL cloud databases

With any distributed database technology, there is always a concern about consistency across instances. The concept of eventual consistency is common in the database space, which means that there can be some latency in maintaining consistency.

It’s a challenge that AWS is aiming to solve with an approach Krishnamoorthy referred to as “optimistic concurrency.” In this approach, all database actions run locally and only the transaction commit goes across the region. This ensures that a single transaction can never disrupt the whole application by holding on to too many logs.

“We have designed Aurora DSQL with optimistic concurrency at its core, no locks are needed for consistency or isolation,” said Krishnamoorthy.

How Amazon DynamoDB global tables improves consistency

AWS is also bringing strong consistency and global distribution to its DynamoDB NoSQL database.

DynamoDB global tables with strong consistency allows data written to a DynamoDB table to be persisted across multiple regions synchronously. Data written to the global table is synchronously written to at least two regions, and applications can read the latest data from any region. That enables mission-critical applications to be deployed in multiple regions with zero changes to the application code.

Among the many AWS users that are particularly enthusiastic about the new feature is United Airlines. In a video testimonial at AWS re:invent, the airlines’ manage director Sanjay Nayar explained how his organization uses AWS with over 2,500 database clusters storing more than 15 petabytes of data, running millions of transactions per second. Those databases power multiple mission critical aspects of the airline’s operations.

United Airlines is using Amazon DynamoDB global tables as part of the company system for seating.

“We opted for DynamoDB global tables as a primary system for seating assignments due to its exceptional scalability and active-active, multi region, high availability, which offers single digit millisecond latency,” said Nayar. “This lets us quickly and reliably write and read seat assignments, ensuring we always have the most up to date information.”

Amazon MemoryDB goes multi-region and helps the NFL build gen AI apps

The Amazon MemoryDB in-memory database is also getting a distribution update with new multi-region capabilities.

While AWS offers vector support in a series of its cloud databases, according to Jeff Carter, VP for relational databases, non-relational databases and migration services at AWS, Amazon MemoryDB has the highest level of performance. This is why the NFL (National Football League) is using the database to help build out gen AI-powered applications.

“We’re using MemoryDB for both short term memory during the execution of the queries and long term memory for saving successful queries to the vector store to be leveraged on future searches,” said Eric Peters, NFL’s director for media administration and post production. “We can use these saved memories to guide new queries to get the results from the next gen stats API quicker and more accurately as time passes, these successful user memories accumulate to make the system smarter, faster and ultimately, a lot cheaper.”

Source link