Invoke has unveiled a new breed of tool that enables game companies to use AI to power image generation.

It’s one of many such image generation tools that have surfaced since OpenAI launched ChatGPT in November 2022. But Invoke CEO Kent Keirsey said his company has tailored its solution for the game industry, with a focus on ethical adoption of the technology through artist-first tools, safety and security commitments, and low barriers to entry.

Keirsey said Invoke is currently working with multiple triple-A studios and has built its technology to work at the scale of large enterprises. I interviewed Keirsey at Devcom in Cologne, Germany, ahead of the giant Gamescom expo. He also gave a talk at Devcom on the intersection of AI and games.

Here’s an edited transcript of our interview.

Disclosure: Devcom paid my way to Cologne, where I moderated two panels.

GamesBeat: Tell me what you have going on.

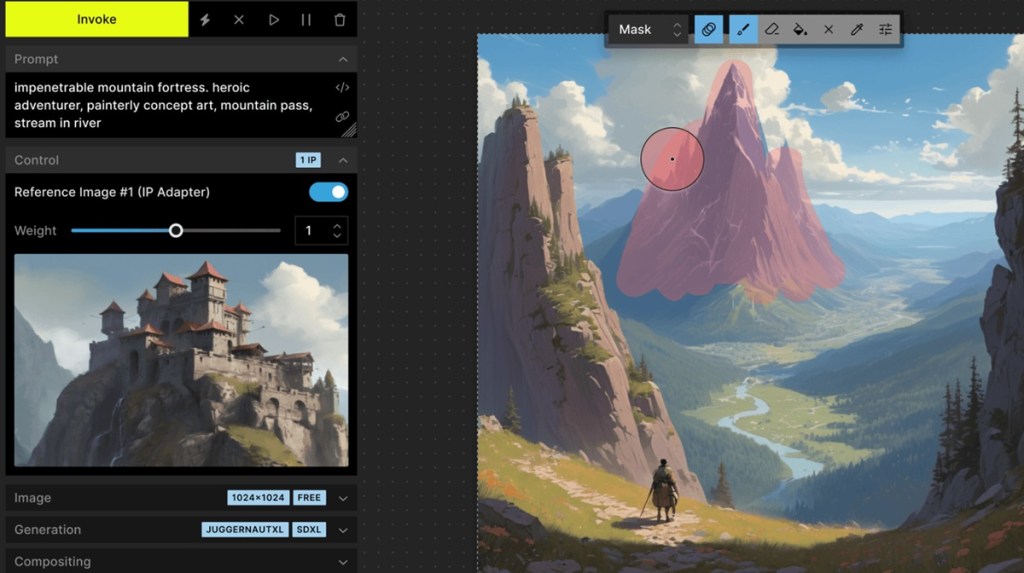

Kent Keirsey: We focus on generative AI for game development in the image generation space. We cover everything from concept art to marketing assets, the full pipeline of image creation: early in the dev process, in the middle when you’re generating textures and assets for the game, or after the fact. Our focus is primarily on controllability and customization. An artist can come in and sketch, draw, and compose what they want to see, and AI just helps them finish it. That’s different from a “push button, get picture” workflow where you roll the dice and hope it produces something usable.

Our customers include some of the biggest publishers in the world. We’re actively in production deployments with them, not pilots. We’re actually rolling out across organizations. We have some interesting things coming down the pike around managing IP inside the tool.

The biggest thing for us is that we’re focused on the artist as the end user. It’s not intended to replace them. It’s a tool for them. They have more control, and they can use it in their workflow. We’re also open source. We just partnered with the Linux Foundation last week on the Open Model Initiative. The plan is to release permissively licensed open models along with our software. Indie users, as well as individuals, can use it, own their assets, and not have any concerns about having to compete with AI.

GamesBeat: What kind of art does this create? 2D or 3D?

Keirsey: 2D art right now. The way I think about 3D: 3D models can be fed images or text, but the outputs themselves, the meshes, aren’t as usable. It can take a 3D artist more work to go in and fix the issues than to just start from scratch. The other piece is that when a 2D artist does a single view and passes it to a 3D model, the model will produce a multi-view. It’ll do the full orthos, if you will. But very often it doesn’t make the same decisions an artist would if they were doing those views themselves.

We’re partnering with some of the 3D modelers in the space and working on technologies that would allow the 2D concept artist to preview that turnaround before it goes to a 3D model, make those iterations and changes, and then pass that to the 3D modeler. But that’s not live yet. It’s just the direction it’s going. The way to think about that is, Invoke is the place where that 2D iteration will happen. Then the downstream models will take that and run with it. I anticipate that will happen with video as well.

GamesBeat: Is there a way you would compare this to a Pixar workflow?

Keirsey: RenderMan, something like that?

GamesBeat: The way they do their storyboards, and then eventually get 2D concepts that they’re going to turn into 3D.

Keirsey: You could look at it that way. Our tool is focused a lot more on the individual image. We’re not doing anything around narratives. You’re not doing sequence design inside our tool. Each frame is effectively what you’re building and composing in the tool. We focus on going deep on model inference. We’re model-agnostic, which means a customer can train their own model, bring it to us, and we’ll run it as long as it’s an architecture we support.

You can think of the class of models we work with as focused purely on images: the open source, open weights image generation models that exist. Stability AI is in the ecosystem. It’s in the open source space we originated from, but there are new entrants to that market, people releasing model weights that, like Stable Diffusion, are open and let you run them in an inference tool like Invoke.

Invoke is the place you would put the model. We have a canvas. We have workflows. We’re built for professionals. They’re able to go in on a canvas, draw what they want, and have the model interpret that drawing into the final asset. They can actually go as detailed as they want and have the AI finish the rest. Because they can train the model, they can inject it with their style. It can be any type of art. It’s style-specific.

If you have a game and you’re going for aesthetic differentiation (if that’s how you’re going to bring your product to market), then you need everything to fit that style. It can’t be generic. It can’t be the crap that comes out of Midjourney where everything feels the same, unless you really push it out of its comfort zone. Training a model allows you to push it where you want it to go. The way I like to think about it, the model is a dictionary. It understands a certain set of words. Artists are often fighting what it knows to get what they’re thinking of.

By training the model they change that dictionary. They redefine certain terms in the way they would define them. When they prompt, they know exactly how it’s going to interpret that prompt, because they’ve taught the model what it means. They can say, “I want this in my style.” They can pass it a sketch and it becomes a lot more of a collaborator in that sense. It understands them. They’re working with it. It’s not just throwing it over the fence and hoping it works. It’s iteratively going through each piece and part and changing this element and that element, going in and doing that with AI’s assistance.

GamesBeat: Do artists have a strong preference about drawing something first, versus typing in prompts?

Keirsey: Definitely. Most artists would say that they don’t express themselves the same way with words, especially when it’s a model that’s some other person’s dictionary, some other person’s interpretation of that language. “I know what I want, but I’m having a hard time conveying what that means. I don’t know what words to pick to give it what’s in my head.” By being able to draw and compose things, they can do what they want from a compositional perspective. The rest is stylistically applying the visual rendering on top of that sketch.

That’s where we fit in: helping marry the model to their vision, so it serves them as a tool rather than replacing the artist. They can import any sketch drawn outside the tool, or sketch directly inside the canvas. You have different ways of interacting with it. We work side by side with something like Photoshop, or we can be the tool they do all the iteration in. In the coming weeks we’ll release an update to our canvas that extends a lot of that capability, adding layers and the whole iterative compositing component they’re used to in other tools. We’re not trying to compete with Photoshop. We’re just trying to provide the suite of tools they might need for basic compositing tasks and for getting that initial idea in.

GamesBeat: How many hours of work would you say an artist would put in before submitting it to the model?

Keirsey: I have a quote that comes to mind from when we were talking to an artist a week or two ago. He said that this new project he was working on would not be possible without the assistance of Invoke. Normally, if he was doing it by hand, it would take him anywhere from five to seven business days for that one project. With the tool he says he’s gotten it down to four to six hours. That’s not seconds. It’s still four to six hours. But he has the control that really allows him to get what he wants out of it.

It’s exactly what he envisioned when he went in with the project. Because it’s tuned to the style he’s working in, he said, “I can paint that. All that stuff it’s helping with, I could do it. This just helps me get it done faster. I know exactly what I want and how to get it. I’m able to do the work in a fraction of the time.”

That reduction in the effort it takes to get to the final product is why there’s a lot of controversy in the industry. It’s a massive productivity enhancement. But most people assume it’s going to go to the limit, where it takes three seconds to get to the final picture. I don’t think that will ever be the case. A lot of the work that goes into it is artistic decision-making. I know what I want to get out of it, and I know I have to work and iterate to get to that final piece. It’s rare that it spits out something perfect where you don’t need to do any more.

GamesBeat: How many people are at the company now?

Keirsey: We have nine employees. We started the company last year: we were founded in February and raised our $3.7 million seed round in June. We launched the enterprise product in January. We’ll probably be moving toward a Series A soon. Games is our number one focus, but we’ve seen demand from other industries. I just think there’s so much artistic motivation, and a need for what we provide, in this industry. We see a lot of friction in gaming, but we also see what the tool can do once you get somebody through that friction and the learning curve of using it. There’s a massive opportunity.

GamesBeat: How many competitors are there in your space so far?

Keirsey: A lot. You can throw a rock and hit another image generator. The difference between what we do and everyone else is that we’re built for scale. Our self-hosted product, which is open source, is free. People can download it and run it on their own hardware. It’s built for an individual creator. It has been downloaded hundreds of thousands of times, and it’s one of the top open source repos on GitHub.

Our business is built around the team and the enterprise. We don’t train on our customers’ data. We are SOC 2 compliant. Large organizations trust us with their IP. We help them train the model and deploy it with all the features you need to roll it out at scale. That’s where our business is built: solving a lot of the friction points of getting it into a secure environment that has IP considerations. When you have unreleased IP and you’re a big triple-A publisher, you vet every single thing that touches those assets. It might be the source of the next leak that gets your game online. Because we’re part of the game development process, a lot of that core IP is being pushed into our tool. It goes through every ounce of legal and infosec review you can get in the business.

I would argue that we’re probably the best or the only one that has solved all these problems for enterprises. That’s what we focused on as one of the core concerns when we were building our enterprise product.

GamesBeat: What kind of questions do you get from the lawyers about this?

Keirsey: We get questions around, whose data is it? Are you training on our data? How does that work? It’s easy for us because we’re not trying to play any games. It’s not like we have weasel words in the contract. It’s very candidly stated. We do not train image generation models on customer content, period. That’s probably one of the biggest friction points that lawyers have right now. Whose data is it?

We eliminate a lot of the risk because we’re not a consumer-facing application. We don’t have a social feed. You don’t go into the app and see what everyone else is generating. It’s a business product. You log in and you see your projects. You have access to these. These are the ones you’ve been generating on. It’s just business software. It’s positioned more for that professional workflow.

The other piece lawyers bring up very often is copyright on outputs. Whose images are these? If we generate them, do we have ownership of that IP? Right now the answer is, it’s a gray area, but we have a lot of reason to believe that with certain criteria met for how an image is generated, you will get copyright over those assets.

The thought process there is that in 2023 the U.S. Copyright Office said that anything that comes out of an AI system from a text prompt, whether it’s ChatGPT or an image generator, does not get copyright. But that didn’t take into consideration the tools that hadn’t been built yet, which allow more control: being able to pass the model your sketch and have it generate from that, or being able to go in on a canvas and iterate, tweak, poke, and prod. The term under copyright law is “selection and arrangement.” That is what our canvas allows for. It allows the artistic process to evolve. We track all of that and manage it in our system.

We have some exciting stuff coming up around that, and we’re eager to share it when it’s ready. But that’s the type of question we get, because we’re thinking about it. Most companies that talk with a legal team are just trying to get through the meeting, whereas we have an interesting conversation about what IP is and how we can be a partner. Just having perspectives on all that puts us a step ahead of most competitors. They’re not thinking about it at all, frankly. They’re just trying to sell the product.

GamesBeat: I’ve seen companies that are trying to provide a platform for all the AI needs a company might have, rather than just image generation or another specific use case. What do you think about that approach?

Keirsey: I would be very skeptical of anyone that is more horizontal than we already are in the image generation space. The reason is that each model architecture has sidecar components you have to build in order to get the type of control we’re able to offer. Things like ControlNet models and IP-Adapter models all sit alongside the core image generation model. The level of interaction we’ve built at the application level typically isn’t something a more horizontal AI tool would go after. It would probably have a very basic text box and maybe a couple of other options. It won’t have the extensive workflow support and truly customized canvas that we’ve built.

Those tools, I think, compete on a different question: does an organization pick DALL-E, Midjourney, or that? They’re just looking for a safe image generator. But if you’re looking for a real, powerful, customized solution for certain parts of the pipeline, I don’t think that would solve it.

If you think about a lot of the image generators out in the industry right now, they take a workflow that uses certain features in a certain way, and then they just sell that one thing. It solves one problem. Our tool is the entire toolkit. You can create any of these workflows that you want. If you want to take a sketch that you have and have it turn into a rendered version of that sketch, you can do that. If you want to take a rendering from something like Blender or Maya and have it automatically do a depth estimation and generate on top of that, you can do that. You can combine those together. You can take a pose of somebody and create a new pose. You can train on factions and have it generate new characters of that faction. All of that is part of the broader image generation suite of tools.

Think about what Photoshop did for digital editing. That’s what we’re doing for AI-first image creation. We’re giving you the full set of tools, and you can combine and interact with them in whatever way you see fit. I think a single-purpose tool is easier to sell, and probably to use, if you’re just looking for one thing. But for the capabilities that serve a broader organization, the large organizations and enterprises making double-A and triple-A games are looking for something that does more than one thing.

They want that model to service all of those workflows as well. It’s a model that understands their IP. It understands their characters and their style. You can imagine that model being helpful earlier in the pipeline, as they’re concepting. You can imagine it being useful if they’re trying to generate textures or do material generation on top of that. When 3D comes, they’ll want that IP to help generate new 3D models. Then, when you get to the marketing, key art and all the stuff you want to make at the end when you release or do live ops, all that IP that you’ve built into the model is effectively accelerating that as well. You have a bunch of different use cases that all benefit from sharing that core model.

That’s how the bigger triple-As are looking at it. The model is this reusable dictionary that helps support all those generation processes. You want to own that. You want that to be your IP as a company. We help organizations get that. They can train it and deploy it. It’s theirs.

GamesBeat: How far along on your road map are you?

Keirsey: We’ve launched. We’re in-market. We’re iterating and working on the product. We have deployed into production with some of the bigger publishers already, but we can’t name anyone specific. Even though we have an artist-forward process, the subject is extremely controversial in this industry, so most organizations don’t want to be named. We have individual artists who are champions of our tool, but they feel they can’t champion it vocally because of their social networks. It’s very hard.

It’s a difficult and toxic environment in which to have a nuanced conversation on many topics today, and this is one of them. That’s why we focus a lot on enabling artists, and why, here at Devcom, we focus on showing artists what is possible. We spoke with one person earlier today. She said, “I think most artists are afraid that this is going to replace them. I wish that there were tools that would help us rather than replace us.” That’s what we’re building.

When they see it and interact with it, there’s a sense of hope and optimism. “This is just another tool. This is something I could use. I can see myself using it.” Until you have that realization, the big fear of your skills being irrelevant, your craft no longer mattering, that’s a very dark place. I understand the feedback that most people have.

I mentioned that we’re spearheading the Open Model Initiative that was announced with the Linux Foundation last week. The goal is to train another open model that solves some of these problems and gives artists more control, but keeps up with what the largest closed-model companies are doing. That’s the biggest challenge right now. There’s an increasing desire for AI companies to close up and try to monetize as quickly as they can. That takes away a lot of an artist’s ability to own their IP and control their own creative process. That’s what we’re trying to support with the work of the Open Model Initiative. We’re excited for that as we near the end of the year.

GamesBeat: Do you see your output in things that have been finished?

Keirsey: Yes. The beauty of what we do, because we’re helping artists use this, it’s not crap that people are looking at and saying, “Oh, I see the seventh finger. This looks off. The details are wrong.” An artist using this in their pipeline is controlling it. They’re not just generating crap and letting it go. That means they have the ability to generate stuff that can be produced, published, and not get criticized as fake, phony, cheap art. But it does accelerate their pipeline and help them ship faster.

GamesBeat: Where are you based now?

Keirsey: We’re remote, but I’m based in Atlanta. We have a few folks in Atlanta, a few folks in Toronto, and one lonely gentleman on an island called Australia.